How much can one individual do to influence the output of a field?

There’s a very famous, though probably apocryphal, story about the Danish king Canute. As a prince he conquered England and became its ruler, later also ascending to the Danish throne, and he came to be remembered as a wise and just – though not necessarily peaceable – ruler. As usually happens to anyone held in wide regard, he allegedly became surrounded by toadies and flatterers.

Unlike many whose heads are turned by such treatment (R Kelly, Kanye West, more than a few National Lottery winners), Canute instead is said to have provided a practical demonstration of how worthless their praises of his power were. Placing his throne on the beach in front of the incoming tide, he ordered the waters back. No surprises in relating what happened next, and with his shoes and leggings thoroughly sodden the pious ruler declared that the only true king was the big guy in the sky.

Whether true or not, it’s an example that scientists would do well to heed. No matter how powerful they may become, how successful they are in terms of their research, how well-funded their group is, there is a limit – and probably quite a low one – to the extent to which any individual can influence the behaviour of his/her colleagues. Whether this applies more or less in science than in other careers is hard to say, but that oh-so-precious independence and freedom that all scientists crave and trumpet probably does much to limit any one person’s influence.

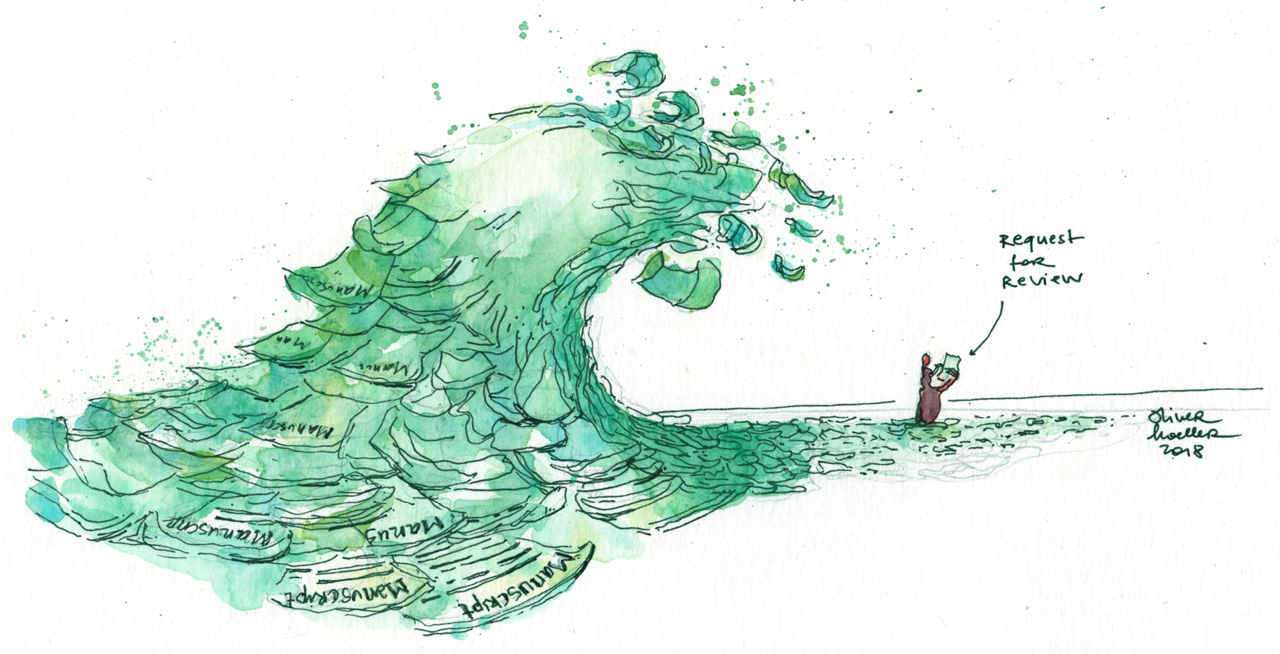

Reviewing papers possibly provides the best example. It’s rare indeed that you get asked to review a paper that can be immediately accepted, and the whole agony of reviewing revolves around the other 99% of requests that land in your inbox. Wonderful as the peer review process is in principle, there’s one trap that even the most experienced can readily fall into, namely that of trying to make the authors do their work your way. Essentially, it’s an attempt to supervise others by proxy. Rather than reviewing the paper on its own merits, the reviewer imagines themselves the authors’ supervisor, and sets about trying to reimagine the story as if they themselves were attempting to publish it. As almost no two scientists will do things exactly the same way, even ones with unimpeachable standards, this easily leads to irritation at different kinds of controls being used, different assays being employed, different representations of the data…and that’s before coming to differences in interpretation.

However, irrespective of what kind of job the reviewer does, it’s by no means a given that the authors will implement any of the suggested changes, or that the editor will compel them to. And even if a reviewer encounters a paper that in his/her opinion is fatally flawed and unfit for publication, that opinion is extremely unlikely to prevent that story from eventually being published. Like a boxer who simply doesn’t know when to quit, a rejected manuscript keeps getting back up: it’s entirely possible to review the same paper on multiple occasions for different journals until it is eventually accepted.

And once a paper is out, there’s a certain tendency to take its conclusions at face value, especially among people who do not work directly in the field and are therefore unaware of any controversy surrounding it. The bewildering panoply of techniques, intense specialisation, and sheer volume of published work make it difficult for most researchers to be expert outside their own small niches. Just as the placebo effect has been mysteriously intensifying over time, one could argue that blind trust in published material is on the increase, despite reproducibility being a concern for many fields. This is possibly another good reason to be extremely cautious about treating publications alone as indicators of an individual scientist’s worth, and the same blind trust arguably underpins much of the enthusiasm for negligently lazy proxies of quality such as the Journal Impact Factor.

So what’s an individual, even one with Canute-ish powers, to do in such a climate? You can try to enforce standards in your own work and in your assessments of others, but there’s really no guarantee that you’ll be heeded. You can spend a whole career in the reviewing trenches painstakingly pointing out the perceived deficiencies in others’ work (“This would benefit from more electron microscopy…”), but no matter what you do, that tide of data keeps coming in and you’re virtually powerless to influence it. It’s not possible for an individual to review every single paper being submitted for publication in a given area, even in relatively small niches.

It’s not just physical and temporal limits that would preclude it. Authors are allowed to, and often do, block specific scientists from reviewing their work on grounds of competition or bias. Block enough reviewers, and in a small enough field they may end up shutting out the people who are best qualified to assess the work. When your entire career may hinge on your ability to produce perceived high-impact (sigh) publications, it’s understandable, if not fully excusable, to try to get your friends rather than your critics to assess your work. An all-too-common upshot of such approaches, so human in their frailty, is that those experts become upset and then offended that their input is apparently being excluded. So the whispering starts, the catty questions at conferences, and the factionalisation of the field intensifies.

Does it matter, or is it another example of academic politics being so vicious because the stakes are so low? While the unsavoury nature of publication politics has led to more than one young scientist deciding that academia is not for them (in itself a tragedy, every single time), does it really matter if suboptimal work ends up in the published literature? In fact, it’s impossible to prevent it. They say that every football manager eventually gets fired, and the best ones simply accept that and focus on the job at hand instead of wondering how long they’ll be there for. In a similar way, all papers eventually get superseded (for both managers and papers there are exceptions, but so few as to prove the rule), so should we and the Canutes get worked up if imperfect research is published? Obviously it matters if the paper is fraudulent and there has been a deliberate attempt to deceive, but beyond that things get extremely blurry. Different people have different standards, and what is acceptable for one may be anathema for another. Plus, standards change over time, so what used to be acceptable now looks rather sloppy: there are plenty of genuinely classic papers out there with figures based on single examples, little to no quantification, and a much greater “trust me” element than would be possible nowadays. The growth and encouragement of multi/interdisciplinary papers may play a part too, as such publications can involve data of varying refinement depending on the particular experimental specialisations of the groups involved.

So what’s to be done? In terms of papers, clearly you can’t force people to do work exactly as you would have done, and there’s a limit to how much you can ask people to change. Either you get trigger-happy with the “reject” button, or you learn to nudge effectively. But at the end of the day, you can’t author a paper you review, and going to the effort of producing a counter publication is often more trouble than it’s worth. Ultimately, communication has to be the solution. It is up to individual scientific communities to enforce standards, and those standards can only be understood and promoted in an environment that facilitates exchange and dialogue: one that is supportive of all its members, and in which the review process is used as a chance to help improve others’ work, not to belittle them or hold them back.

If you want your field to have a reputation for doing good science, you can’t – you Canute – do it alone.
