We think of science as objective, but there are systematic biases that affect which research comes to our attention, and that can gravely distort our impression of a field.

One of these biases has been talked about a great deal: publication bias. In fact, this bias has two linked drivers: authors and journal editors. Suppose you are evaluating a series of compounds to see if they enhance apoptosis in a given cell type. After a long run of null findings, you find that compound X is effective. Many researchers would not bother to try to publish the null results (a bias that Rosenthal christened the 'file drawer problem' in 1979) but would proceed to write up the results with X. And if they bucked the trend and decided to report the earlier null results, it is likely that the paper would be rejected as uninteresting.

You may wonder why this is regarded as a problem. Surely the journals would get very dreary if they were full of null results? The problem arises because of the biasing effect of selective publication. Some compounds will give a significant effect purely by chance, and if we only hear about those, we may get an unrealistically rosy view of what is effective. This has been a recognized problem in clinical trials for years, which is why researchers are now required to register trials before starting a study. In addition, there is the issue of waste: if you spend a year testing compounds that don't work, but then tell nobody about it, other researchers may waste time exploring the same blind alleys.
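To make the arithmetic of selective publication concrete, here is a minimal sketch in Python. The numbers are purely illustrative assumptions, not data from any study: a hypothetical screen of 100 compounds, 5 of which really work, a significance threshold of 0.05 and statistical power of 0.8. The function name `expected_screen` is invented for this example.

```python
def expected_screen(n_compounds, n_effective, alpha=0.05, power=0.8):
    """Expected outcomes when each compound is tested once.

    alpha: chance that an ineffective compound gives a significant result.
    power: chance that an effective compound gives a significant result.
    All values are illustrative assumptions.
    """
    true_pos = n_effective * power
    false_pos = (n_compounds - n_effective) * alpha
    significant = true_pos + false_pos
    return {
        "significant": significant,
        "null": n_compounds - significant,
        # Share of significant results that arose purely by chance.
        "false_share_of_significant": (
            false_pos / significant if significant else 0.0
        ),
    }


# Hypothetical screen: 100 compounds, 5 of which really work.
result = expected_screen(n_compounds=100, n_effective=5)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Under these assumed numbers, fewer than nine of the 100 tests are expected to reach significance, and more than half of those would be chance findings. If only the significant results are written up, a reader has no way of telling the two kinds apart, and no record of the ninety-odd compounds that did nothing.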

On top of this we have another bias, confirmation bias, that is part of our human make-up. Psychologists have shown that we are much more likely to attend to and remember things if they fit with our preconceived ideas. It's generally good for the brain to be wired up to work this way – it allows us to focus on what is relevant and not expend effort processing every new piece of incoming information. It does, however, make it difficult to be a good scientist, which calls for us to explain rather than ignore evidence that contradicts our favourite theory. I've noticed this time and time again when writing introductions to my research papers. The articles that come to mind are ones that fit well with my world view. I tend to forget about those studies that obtained inconsistent or anomalous results. And the publication process encourages us to make an article attractive to readers by 'telling a story'. This doesn't mean making things up, but it does mean avoiding digressions, and not presenting the reader with a dump of incoherent facts. We have to be selective to be readable, but it is all too easy to omit the important but inconvenient truths.

When giving talks about biases, I sometimes ask audiences how morally acceptable it is to refrain from publishing null results, or fail to cite a relevant study that does not fit the story you want to tell. Most people regard these as minor peccadillos at worst. But in a cumulative science they can have serious consequences.

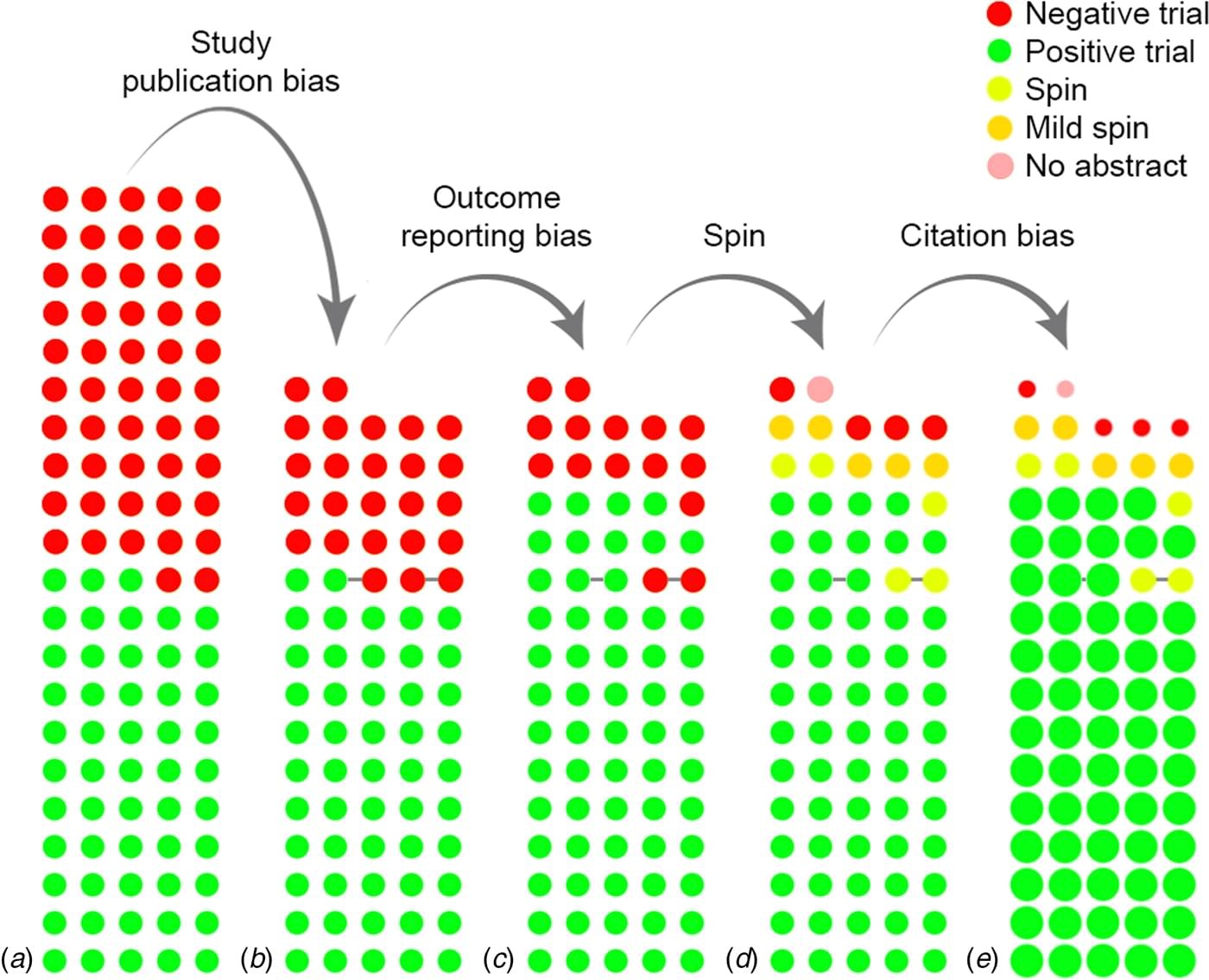

Consider this figure from a paper by de Vries et al. (2018). Each dot in a bar corresponds to a study: green for positive results and red for null results. The leftmost bar shows all the studies that were found on a clinical trial registry for testing an intervention for depression. In the rightmost bar, the size of the dot represents the number of citations received by that study. The difference between these bars is striking: we go from a situation where around 50% of studies show a positive effect to one where almost all studies do so. This study was only possible because trials in this field are registered, so we can see the unpublished studies. In most fields those studies are invisible, so our initial knowledge corresponds to the second bar from the left, which already shows a rosy picture for the intervention. What happens next is the introduction of further bias, first by authors selecting from a range of measures only those that show positive outcomes, and second through spin, where the summary of a paper overhypes the findings. And if a study with null results somehow survives to make it into the literature, then it gets rapidly forgotten, as people find it uninteresting and fail to cite it.

Figure 1. See text for explanation. From de Vries, Y. A. et al. (2018) The cumulative effect of reporting and citation biases on the apparent efficacy of treatments: the case of depression. Psychological Medicine 48, 2453–2455, doi:10.1017/S0033291718001873. Licensed under CC BY 4.0.

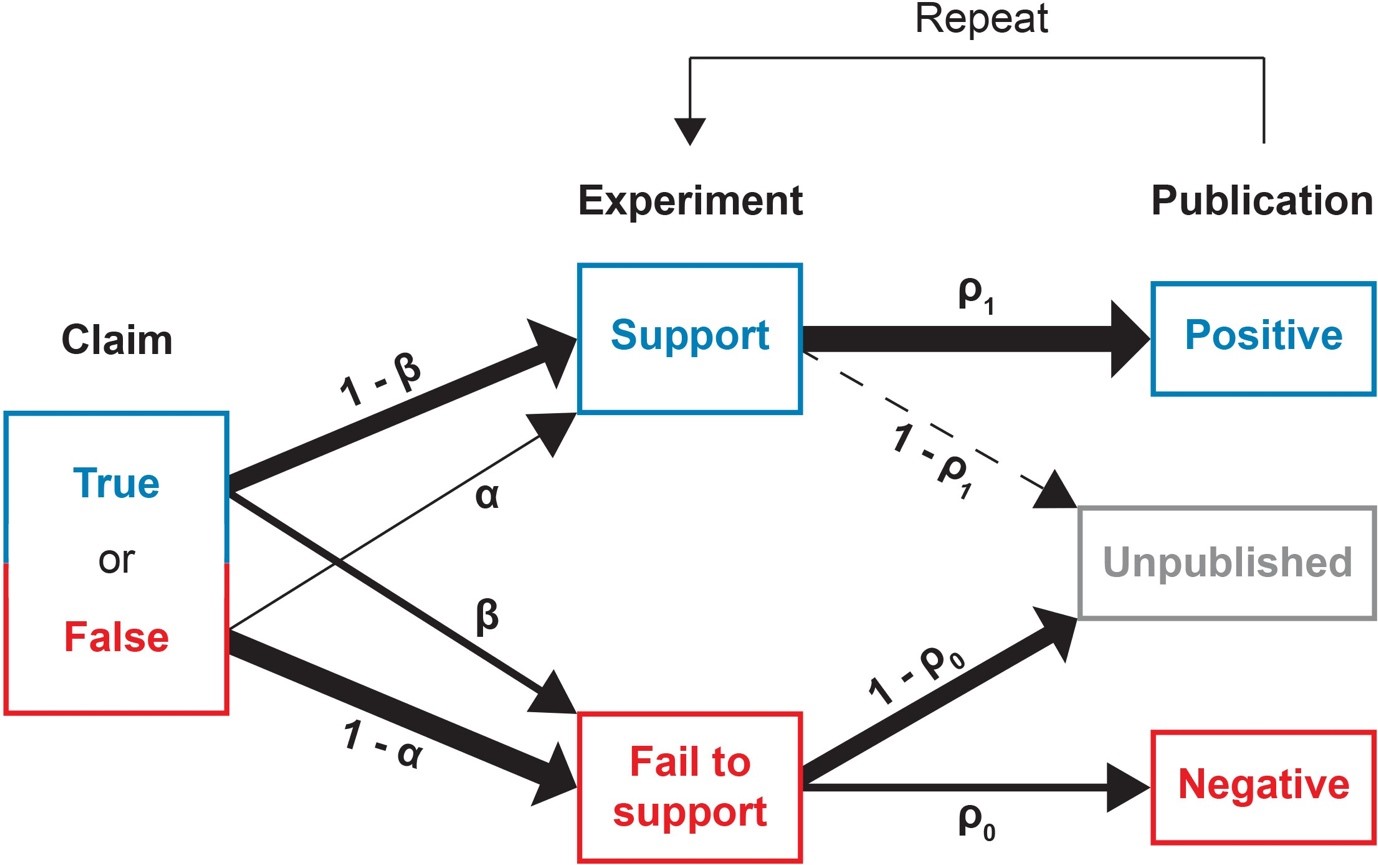

A theoretical analysis documenting this kind of process was carried out by Nissen et al. (2016), who showed the cumulative impact on the literature of publication bias in leading to 'canonization of false facts'. If we add citation bias into their model, such that published studies get ignored if they have null results, the convergence on an entrenched but wrong conclusion is even more rapid. It can then be very hard to overturn the conclusion, because we assume there is such strong evidence that we do not need to question it.

Figure 2. From Nissen, S. B., Magidson, T., Gross, K. and Bergstrom, C. T. (2016) Publication bias and the canonization of false facts. eLife, 5, e21451, doi:10.7554/eLife.21451.001. Licensed under CC BY 4.0. True claims are correctly supported with probability 1−β, while false claims are incorrectly supported with probability α. Positive results that support the claim are published with probability ρ1, whereas negative results that fail to support the claim are published with probability ρ0. This process then repeats, leading to evidence against a true claim disappearing from the literature over time if ρ0 is lower than ρ1.
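The quantities in the caption can be combined into a rough calculation of what the published record looks like. The sketch below is not the model from Nissen et al., which tracks how belief changes over repeated experiments; it only computes the expected share of published results that are positive, using the caption's α, β, ρ1 and ρ0. The function name and the parameter values are assumptions chosen for illustration.

```python
def published_positive_share(p_positive, rho1, rho0):
    """Expected fraction of published results that support the claim.

    p_positive: chance that a single experiment supports the claim
                (1 - beta for a true claim, alpha for a false one).
    rho1, rho0: publication probabilities for positive and negative results.
    """
    published_pos = p_positive * rho1
    published_neg = (1 - p_positive) * rho0
    return published_pos / (published_pos + published_neg)


# Illustrative values only (assumptions, not taken from the paper).
alpha, beta = 0.05, 0.2
rho1 = 1.0
for rho0 in (1.0, 0.5, 0.1):
    false_claim = published_positive_share(alpha, rho1, rho0)
    true_claim = published_positive_share(1 - beta, rho1, rho0)
    print(f"rho0={rho0}: false claim {false_claim:.2f}, "
          f"true claim {true_claim:.2f}")
```

When ρ0 equals ρ1, the published record mirrors the experiments themselves. As ρ0 falls, the literature on a false claim looks steadily more supportive: with these assumed values and ρ0 = 0.1, roughly a third of published results on a false claim are positive, even though only 5% of the experiments were.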

What can be done about this? Because confirmation bias is so deeply rooted in our cognitive processes, scientists need to work actively to resist it. In theory, our peer review processes are designed to help us do just that, by having the work evaluated by someone who may not share our biases. But peer review typically comes at too late a stage in the research process, and a case can be made for moving it to a point before a lot of time and energy has been invested in data collection (see Bishop 2020 for more suggestions along these lines).

Some of our most iconic scientists are good role models for combating confirmation bias. Darwin had a habit of purposefully jotting down observations or thoughts that went against his theory, because he knew he would otherwise forget them. He explained how this helped him think through objections to his work, and so make a much stronger argument. We would all benefit from adopting a similar approach.

Further reading

Bishop, D. V. M. (2020) The psychology of experimental psychologists: Overcoming cognitive constraints to improve research. The 45th Sir Frederic Bartlett Lecture. Quarterly Journal of Experimental Psychology, 73(1), 1–19, doi:10.1177/1747021819886519

Bishop, D. V. M. (2020) How scientists can stop fooling themselves over statistics. Nature, 584, 9, doi:10.1038/d41586-020-02275-8

Top image of post: by Andrew Martin from Pixabay
